Familiar faces: Has facial recognition tech gone too far?

June 14, 2022 / Wesley Sukh

You ever just…

For whatever reason, have you ever found yourself asking "is this really happening?" You know the feeling: suddenly movie tickets cost like $32, or phones just don't come with chargers anymore, and you're like "I guess this is the new norm then". But those norms… sometimes they just don't feel right. Well, the tech equivalent of that happened to me last night, and surprise surprise, it opens the door to a much larger issue.

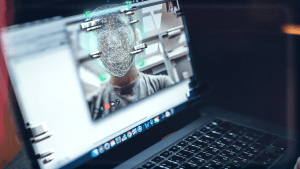

Prior to my own first-hand experience, neither I nor anyone on the Session team had encountered this pretty wild UI feature. What happened? The Uber app required me to scan my face in order to gain access to rides. A lot of people probably scan without thinking, but this is a big, BIG deal.

First, though, I cannot stress enough how much I understand the reasoning for this feature. We have a duty of care when it comes to COVID-19, but this article is about how the facial recognition technology is being implemented.

However, access to an essential service being locked behind a system that lacks transparency is kind of... strange, right? At no point during this encounter was I shown more than a cursory sentence trying to handwave my privacy concerns away. The capabilities of their scanning technology, the management of my data and the social repercussions associated with this request were not addressed at all. Hence the earlier reaction: "can this be real?"

The Skynet issue

This brings us more or less to the centre of our little conundrum. Facial scanning tech is absolutely booming, and we're seeing it become more and more prevalent, particularly in essential services industries. That is convenient, but it also opens the gateway to some very possible dystopian realities.

As I stood there waiting for my lift home, I quickly realised that unless I agreed to Uber's facial scanning, I was barred from a platform which (through some dubious means, in some cases) has quickly become the prime mode of transportation for many people, especially at-risk people who feel that rideshares are a safer alternative to public transportation.

As this technology spreads across industries, we're starting to see it integrated into even our daily financial systems and workflow software. This writer definitely thinks we shouldn't accept this software as casually as it is being introduced, and here's why.

Smile, you're on camera

The integration of facial recognition software in transport services is unfortunately only the tip of a Titanic-sized iceberg. In fact, we're already seeing a growing number of essential services adopting facial recognition tech, which really isn't something we should allow. It's becoming increasingly clear that this shift marks the next evolution in data mismanagement, and could be the next step down the road to mass surveillance.

Mastercard recently started rolling out one of the creepiest versions of this technology yet... and uh, oh boy. Billing it as ‘the new era of biometrics’, Mastercard is now allowing customers to purchase goods and services using their new ‘Biometric Checkout Program’, or what we'd prefer to call the ‘Creepy Checkout Program’.

"Consumers can simply check the bill and smile into a camera or wave their hand over a reader to pay."

This excerpt is from their press release announcing the new program, which included less than one paragraph on long-term security considerations. We sure hope they are paying more attention to privacy and security behind the scenes, but they really need to be more transparent about how they will handle both. In fact, if you were thinking that you couldn't find anything on their data management solution or, you know, literally anything about privacy: you would be correct.

This does quite eloquently highlight the dissonance of this tech. Facial recognition and biometrics are meant to be all about security, authentication, and trust — but if we aren’t told how and why this tech is even being used, how is that possible?

The idea that access to your financial transactions could become tied to compulsory facial recognition is absurd. Personal data of this sensitivity (and value) should be managed more carefully. The likelihood that this data will be stored on centralised servers or sold for ad revenue is also depressingly high. And who is going to lose the most out of this? The most at-risk, marginalised people in our communities.

You thought it couldn't get worse? lol

At this point in the article we wouldn't blame you if you were thinking: "yeah, that all makes sense, but dystopian futures? That's a stretch."

Well, not exactly — and let me show you why.

Whilst both Uber and Mastercard highlight why we should not integrate facial recognition technology into essential services, it is without a doubt Zoom (you know, 2020's greatest workplace phenomenon) that hammers the final nail into the coffin (again, not sorry for the cliché).

Ahh Zoom, that cult-like workplace app that crash-landed into everyone's lives early in the pandemic with a host of security and privacy issues. Well, now they're experimenting with in-call emotional recognition software, using trackers and AI algorithms to collect emotive responses and body language data. Zoom is allegedly collecting this information under the pretence that it will be sold to improve workflow, to benefit consumer sales analysis, and to *checks notes in disbelief* track students' emotional engagement with lecture material. Really, Zoom? Really? Not only is it weird that my sometimes-bath-robed Zoom meetings are being monitored for ‘engagement’ purposes, but this technology challenges almost every digital privacy consideration conceivable, especially when we're talking about minors and students (seriously Zoom, wtf) trying to access essential education services.

This does, however, position us to fully appreciate the gravity of the situation, as the limit of how far data mismanagement can be pushed across compulsory or essential platforms keeps being raised. It must be asked: at what point do we cease to compromise digital privacy for convenience, and where does the rollout of these surveillance-state-like ideals go from here? Our verdict: nowhere good.

Food for thought

So let's not normalise the implementation of surveillance security tech. To be clear: surveillance is not security. Surveillance is not privacy. Surveillance is not safety. Yes, we know it's tough. Both Uber and Mastercard have cited COVID-19 as justification for implementing this tech, and honestly we kinda get it. It can be easy to take shortcuts using things like facial recognition tech, but this time we think it's better to take the long way round. Look, we may not have the solution for you in this article, but we can promise you it shouldn't involve barring access to essential transport through facial recognition software, scanning and tracking a consumer's face any time they want a coffee, or monitoring the body and facial language of students. This all seemed pretty straightforward to us, but when there's revenue to be made and data to be sold, it never ceases to amaze us just how far big tech is willing to go.
